Jan 2017 Issue 3

Research and Development

Sharing Visual Content with Colour Blind Persons

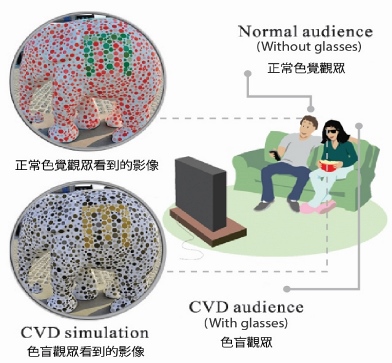

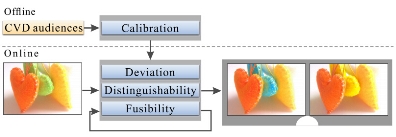

WONG Tien-Tsin, SHEN Wuyao, MAO Xiangyu, HU Xinghong, and LIU Xueting

For digital visual content presented on a display, such as TV, movies, and computer games, we may also change the colours in the content to help persons with colour vision deficiency (CVD) discriminate colours. However, once the colours are changed, the content appears odd when viewed by normal-vision audiences. This means that normal-vision and CVD audiences cannot share the same visual content. Unfortunately, the need for such sharing is quite common in both home and working environments, given the significant population of persons with CVD.

To enable visual sharing between persons with CVD and those with normal vision, the colours of the visual content must be altered so that the new colours are discriminable to CVD audiences while the colour change remains unnoticeable to normal-vision audiences. This sounds impossible, but it becomes feasible with the additional channel provided by a popular and low-cost stereoscopic display.

With stereoscopic displays, different visual contents (a left view and a right view) can be presented to different eyes (the left eye and the right eye). Interestingly, even when our two eyes view different content, the human vision system can still fuse the content from both eyes into a single percept via a complex non-linear neurophysiological process [1, 2]. In particular, our binocular vision system can fuse two views that differ in colour and contrast into a single percept in a non-linear fashion. In other words, given two such views, the visual experience of non-linear binocular fusion differs from the visual experience of a linear blending of the two views. Therefore, we propose to allocate these two different visual experiences separately to persons with CVD and to normal-vision audiences.
Those with CVD will view the non-linear fusion of the two views, and normal-vision viewers will perceive the linear blending of the two views. Our aim is to alter the colours in the two views so that the non-linear fusion is discriminable to people with CVD and the linear blending is similar to the original image. Figure 1 illustrates the proposed idea and configuration.

The major challenge of our design is how to synthesize the two desired views. Given an image with colours that may confuse CVD audiences, we wish to synthesize an image pair (a left image and a right image) that maximizes colour distinguishability for the CVD audience when displayed binocularly, while the blending (average) of the left and right images remains equivalent or close to the input image.

The synthesized image pair is then presented to the audiences via a stereoscopic (binocular) display. Normal-vision viewers may simply watch the 2D images without wearing any glasses. In doing so, they perceive the blended result of the left and right images, which should be quite close to the original image. CVD viewers wear stereoscopic glasses to view the binocular fusion of the image pair, and therefore perceive the non-linearly fused result of the left and right images. Making the colours discriminable in both the left and right images makes the fused colours discriminable to CVD viewers as well.

To synthesize an image pair that maximizes colour distinguishability, we propose a system that consists of an offline phase and an online phase, as illustrated in Figure 2. The offline calibration is a one-off step, performed once for each CVD individual so that the synthesized result can be tailored to his or her CVD type and level of severity.
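
The averaging requirement described above can be illustrated with a minimal sketch. It is not the authors' optimization: it simply assumes a hypothetical per-pixel `offset` that is added to one view and subtracted from the other, so that the linear blend reproduces the input. The names `make_pair`, `blend`, and `offset` are illustrative only, and images are assumed to be float arrays with values in [0, 1].

```python
import numpy as np

def make_pair(image, offset):
    """Split `image` into a left/right pair whose average equals `image`.

    image  : float array with values in [0, 1], e.g. shape (H, W, 3)
    offset : array of the same shape; a hypothetical colour shift
             (an assumption of this sketch, not taken from the article)
    """
    # Limit the shift so that both views stay inside the displayable range.
    headroom = np.minimum(image, 1.0 - image)
    offset = np.clip(offset, -headroom, headroom)
    left = image + offset    # one view is shifted in one direction
    right = image - offset   # the other view is shifted the opposite way
    return left, right

def blend(left, right):
    """Linear blending (average) of the two views."""
    return 0.5 * (left + right)

# Example with a random stand-in image and a random shift.
rng = np.random.default_rng(0)
image = rng.random((4, 5, 3))
left, right = make_pair(image, rng.normal(scale=0.5, size=image.shape))
deviation = np.abs(blend(left, right) - image).max()
```

Here `deviation` stays at floating-point round-off level even though `left` and `right` differ from each other. In the proposed system the two views would instead come from the optimization, which the article says allows the blend to be merely close to the input rather than identical to it.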
During the online recolouring phase, we formulate the image pair synthesis as an optimization problem that simultaneously maximizes the colour distinguishability of the image pair for CVD audiences and minimizes the deviation of the blended image (the average of the left and right images) from the input image, based on three energy terms: the deviation term, the distinguishability term, and the fusibility term.

Our results for various types of images have been validated via multiple quantitative experiments and user studies. Figure 3 shows one such synthesized image pair. The upper row shows the input image, the synthesized image pair, and the blending of the synthesized left and right images. The blended result is effectively indistinguishable from the input. The lower row shows the simulated appearance of the corresponding images when viewed by a person with CVD. Both the synthesized left and right images show significantly enhanced colour distinguishability.

Our work was presented at the academic conference ACM SIGGRAPH 2016, demonstrated to the general public at Electronic Fair 2016 and Innocarnival 2016, and reported by several local media (Figure 4).

To date, we have tackled still images and performed a pilot test on an extension to video. More sophisticated algorithms may be required to maintain temporal coherence in more challenging cases (which may significantly reduce the solution space), and further in-depth evaluation is necessary. This is part of our ongoing research. At present, visual attention is not taken into consideration in the computational model. We believe that if visual attention is taken into account, some of the constraints can be relaxed further, which may lead to an even larger solution space.

This study is supported by the Research Grants Council of the Hong Kong Special Administrative Region, under the RGC General Research Fund (Project No. CUHK 417913).

References:
